Directions for Teaching and Learning

AI tools present an opportunity for meaningful discussions with students about academic integrity. At NIC, we recognize that AI can be used ethically to enhance teaching and learning. The use of AI for assignments or assessments does not inherently constitute academic misconduct (Eaton, 2023, 6 Tenets of Post-plagiarism). Instructors are encouraged to state the permitted levels of AI use explicitly in their course outlines and to engage students in open conversations about these technologies. These discussions support the development of digital literacy by addressing both the risks and benefits of AI tools.

If an instructor specifies that no external assistance is permitted for a graded assignment, NIC will consider any unauthorized use of GenAI tools a form of academic misconduct, as outlined in the NIC Academic Integrity Policy. The policy provides for the review of academic work for authenticity and originality and defines inappropriate use of digital technologies as misconduct.

It is critical for instructors to provide clear, course-specific statements in their course outlines about AI use as it relates to course outcomes, assignments, and graded evaluations. State clearly whether AI may or may not be used for idea generation, editing, organization, studying, and so on, so that both you and your students know the boundaries to work within. If academic misconduct involving AI is suspected, instructors should follow NIC's established academic integrity procedures.

AI offers interesting possibilities but also raises important questions about meaningful learning, how learning can be measured reliably, and how assessments might be redesigned to focus more on learning processes rather than only on the final product. While shifting to authentic assessments can help address potential misuse of AI, authentic assessments are also likely to evolve to include collaboration with AI or its integration into student work.

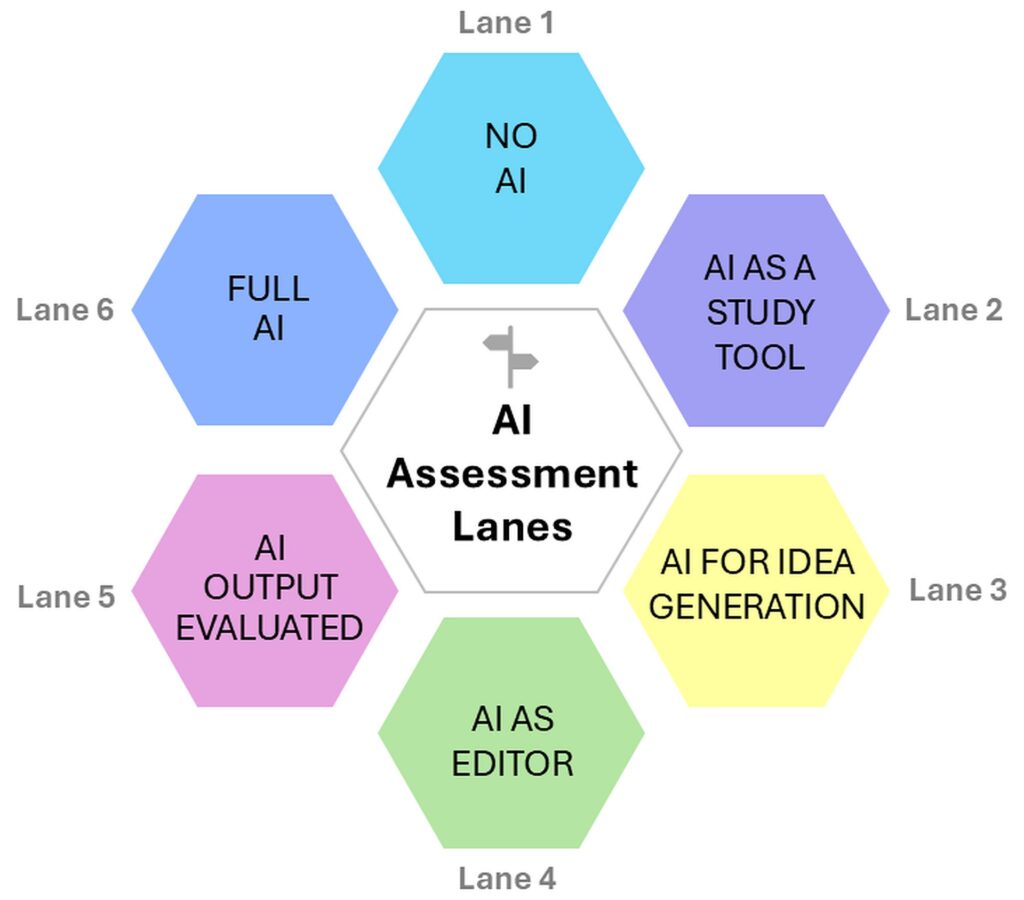

At NIC, it is essential to outline expectations regarding the use of AI tools in the course outline. These expectations should align with other course policies and be reinforced both in writing and through discussions with students throughout the term. Providing a rationale for how these guidelines support course learning outcomes fosters alignment, transparency, and understanding. See the NIC AI Assessment Scale for wording that indicates, for each assignment, which ‘lane(s)’ of GenAI use are permitted or whether no GenAI use is permitted. Also visit the Providing Clarity and Alignment: Course Outline Chart page for further details on how to align graded coursework and the use of GenAI with course learning outcomes.

See examples below:

AI Tools Not Permitted (Lane 1)

OPTION 1: The use of artificial intelligence tools such as ChatGPT, Claude, Copilot, NotebookLM, and many other tools to complete graded coursework (assignments, exams, projects, etc.) in this course is not permitted under any circumstances. For the purposes of this course, the use of GenAI tools for any part of the process of completing graded coursework (including editing, idea generation, organization, format development, etc.) will be considered academic misconduct because it violates the principle that student work must be authentic and original.

OPTION 2: The use of artificial intelligence tools is not permitted in any graded course assignments unless explicitly stated otherwise by the instructor. This includes ChatGPT, Copilot, Gemini and other AI tools and programs.

GenAI Tools Permitted as a Study Tool (Lane 2)

Students are permitted to use AI tools as a study aid for coursework only (for example, as a tutor or to prepare practice questions), but these tools may not be used to create content for any assessed work or final submission. Students remain ultimately accountable for the work they submit. Any content generated or supported by an artificial intelligence tool must be evaluated for accuracy and cited appropriately. Your instructor will provide further information in class. If you have questions about this, please speak with your instructor.

AI Tools Permitted as an Idea Generator (Lane 3)

Students are permitted to use AI tools for the idea stages of coursework only (for example, gathering information or brainstorming), but these tools may not be used to create content for any assessed work or final submission. Students remain ultimately accountable for the work they submit. Any content generated or supported by an artificial intelligence tool must be evaluated for accuracy and cited appropriately. Your instructor will provide further information in class. If you have questions about this, please speak with your instructor.

AI Tools Permitted as an Editor (Lane 4)

Students are permitted to use GenAI tools as an editor and proofreader for coursework only (for example, to check spelling, sentence structure, and grammar), but these tools may not be used to create content for any assessed work or final submission. Students remain ultimately accountable for the work they submit. Any content generated or supported by an artificial intelligence tool must be evaluated for accuracy and cited appropriately. Your instructor will provide further information in class. If you have questions about this, please speak with your instructor.

AI Tools Permitted as AI Output Evaluated (Lane 5)

Students are permitted to use AI tools to produce outputs that are then evaluated and compared. Students remain ultimately accountable for the work they submit. Any content generated or supported by an artificial intelligence tool must be evaluated for accuracy and cited appropriately. Your instructor will provide further information in class. If you have questions about this, please speak with your instructor.

AI Tools Permitted as Full AI Use (Lane 6)

Students are permitted to use AI tools for all aspects of coursework, including as a study tool, idea generator, and editor, for producing outputs to be evaluated, and for creating the final product for submission. Students remain ultimately accountable for the work they submit. Any content generated or supported by an artificial intelligence tool must be evaluated for accuracy and cited appropriately. Your instructor will provide further information in class. If you have questions about this, please speak with your instructor.

Examining and rethinking assessments is critical. Instructors may be using assessments that are vulnerable to students using AI in a lane other than the one designated for the assessment, for example, using AI to co-author portions of an essay when AI use has been prohibited for that assessment. This review, which can be done independently or in collaboration with NIC's Centre for Teaching and Learning Innovation (CTLI), helps determine whether a redesign is necessary based on the assessment's potential vulnerabilities or the future skills students need to develop.

Instructors can test their own assessments for vulnerability by trying to use AI to help complete them. Instructors should be mindful that some tools may use uploaded content (from students, instructors, etc.) to train their AI systems, which could make that content publicly accessible. If assessments need to be redesigned, start small: focus on the assessment that poses the greatest challenge or has the highest impact. Redesign that assessment for one term, evaluate its effectiveness, and refine it based on the experience.

If instructors provide permission for AI use in coursework, they should also ensure that students know how to appropriately acknowledge use of these tools. See NIC’s Library AI guide. You may also choose to have students provide an appendix to their work showing prompts and outputs. If students are not sure whether and how to acknowledge AI use in their academic work, they should check with their instructors.

NIC strongly advises against the use of AI detection tools on student work due to several legal, pedagogical, and practical concerns. Instructors must not upload student academic work or personal information to AI detectors that have not undergone a Privacy Impact Assessment (PIA) and received formal approval for use at NIC. At this time, no AI detection tools have been approved or are undergoing a PIA at NIC.

Uploading students’ personal information to unapproved services may violate the Freedom of Information and Protection of Privacy Act (FIPPA). Additionally, such actions could breach the Copyright Act, as students retain copyright ownership of their work.

Other concerns include:

- Accuracy and Reliability: AI detection tools are often inconsistent in identifying AI-generated content, with high rates of false positives that can mistakenly flag original student work. False accusations can lead to undue stress, reputational harm, and disputes that are difficult to resolve due to the opaque nature of detection algorithms.

- Bias: These tools may disproportionately flag work by non-native English speakers, raising equity issues.

- Evasion: Detectors can be easily fooled, making their findings unreliable.

- Rapid Advancement: AI technology evolves quickly, and detection tools struggle to keep pace.

- Transparency: Most tools lack the ability to explain how or why content is flagged as AI-generated, leaving students with little recourse to challenge incorrect assessments.

Currently, NIC does not plan to purchase or support AI detection tools institutionally, aligning with practices at many other post-secondary institutions. Faculty are encouraged to explore alternative approaches, such as designing assessments that emphasize process and originality, to uphold academic integrity in their courses.

Because NIC has not yet completed or approved any Privacy Impact Assessments (PIAs) for GenAI tools, NIC instructors cannot require students to create accounts with GenAI tools or use GenAI tools that may collect their personal information, whether through student or instructor inputs.

BC Freedom of Information and Protection of Privacy Act (FIPPA)

This act is provincial legislation that concerns the public's right to access information held by public bodies and the protection of individuals' privacy. FIPPA sets out how public bodies may collect, use, and disclose personal information. Under FIPPA, it is illegal for a public body to collect, use, or disclose personal information in any way the Act does not authorize.

Privacy Impact Assessments (PIAs) are a legislative requirement of FIPPA for any initiative that involves the collection, use, or disclosure of personal information. PIAs assess whether an initiative or tool complies with FIPPA and evaluate any privacy and security risks.

Personal information (PI)

Personal information (PI) refers to recorded information about an identifiable individual, excluding contact information used for business purposes. NIC is committed to protecting the privacy of all faculty, staff, and students and is required to act in accordance with the Freedom of Information and Protection of Privacy Act (FIPPA). Examples of PI include students’ names, personal contact information, academic history, student numbers, and financial information. Additionally, student assignments may contain PI about their lived experiences and may also constitute their intellectual property.

Collecting Personal Information Under FIPPA

The most common reason for collecting PI under FIPPA is when the information is necessary and directly related to a program or activity of the public body. If NIC collects PI from students—whether directly or through third-party tools—for assignments or activities necessary to achieve the learning outcomes of a course, NIC is responsible for ensuring that all potential collection, use, and disclosure of PI complies with FIPPA.

If students are required to use tools or services that collect PI, these tools must have undergone an approved Privacy Impact Assessment (PIA) to confirm their compliance with FIPPA. Without an approved PIA, NIC cannot guarantee compliance and therefore cannot require students to use such tools.

What Personal Information Does GenAI Collect?

AI tools and services often collect PI from users. At a minimum, account creation requires enough data to associate an individual with their account, such as name and email address. Depending on the tool and payment model, additional demographic data and payment information may also be collected.

Even if a user account is not required, AI tools may collect other data, depending on their terms of service. Examples of collected data include:

- Log Data: IP address, date/time of use, browser settings.

- Usage Data: Country, time zone, content requested/produced.

- Device Data: Information about the user’s device.

- Session Data: Interactions during the use of the tool.

Any personal information voluntarily entered into an AI tool may also be collected. This data may be stored, used for further training of the AI model, or even sold to third parties for marketing or other purposes. Additionally, much of this data is stored outside Canada, raising further privacy concerns.

NIC faculty and staff are encouraged to ensure any AI tools used in learning activities comply with FIPPA requirements to uphold student privacy and intellectual property rights.

AI tools rely on content drawn from the extensive datasets used during their training. Many resources in these datasets may be protected by copyright and may not have been licensed or approved for use in training the AI tool. This can result in outputs that infringe on copyright. For example, an AI tool may generate content derived from a journal article that a user uploaded without the proper permissions. Such outputs could constitute copyright infringement of the original source material.

Copyright Infringement and Fair Dealing

The relationship between copyright law and fair dealing in the context of AI remains unclear. Additionally, Canadian copyright law is currently understood to apply only to works created by humans. This raises questions about who owns the copyright of materials generated by AI. Given the varying degrees of human input involved in using AI tools, authorship and ownership of these works have yet to be clarified under Canadian law.

Educator and Student Intellectual Property

Educators and students should be cautious when inputting their own intellectual property, such as teaching materials or academic work, into AI tools. Data or content entered into these tools may be used for further training of the AI system or could be shared beyond the user’s control.

Third-Party Intellectual Property

Uploading third-party materials, such as journal articles, textbooks, or teaching resources, into AI tools without proper authorization may constitute copyright infringement. To avoid this, ensure that:

- You have explicit permission from the copyright holder, or

- The use of the material qualifies under Canada’s Fair Dealing provisions.

Implications for Open Educational Resources (OER)

Using GenAI to create Open Educational Resources (OER) introduces additional complexities regarding copyright and intellectual property. These implications are still being explored and determined.

For more information on AI and copyright issues, NIC faculty, staff, and students are encouraged to consult institutional resources or reach out to the NIC Library for guidance.

The use of AI tools in teaching and learning opens new possibilities but also raises significant ethical considerations. Awareness of these factors is crucial to ensure that AI is used responsibly and in alignment with NIC’s values. Understanding these risks also supports the development of AI literacy, helping educators and students make informed decisions about when and how to use these tools.

Bias and Discrimination:

AI tools generate content based on extensive datasets, which often include societal biases (e.g., racism, sexism, ableism). These biases can be reflected in the tool’s outputs, perpetuating inequalities and discrimination. This issue has been documented in both text and image generation.

Data Collection:

AI tools require large amounts of data to function, including potentially sensitive personal or copyrighted information. There is a risk of misuse, data breaches, or unauthorized data sharing.

Lack of Human Interaction:

While AI can enhance personalized learning, it cannot replace meaningful human interactions, which are essential for social and emotional development in educational settings.

Unethical Labor Practices:

The development of AI tools often relies on low-paid labor, particularly in the Global South, to train models and moderate content, raising concerns about exploitation.

Constantly Changing Policies:

AI platforms frequently update their terms of service, privacy policies, and intellectual property rules, requiring users to stay informed about these changes.

Hallucinations and Unreliable Content:

AI models generate content by predicting likely output rather than by verifying its accuracy. This means they can produce fabricated or inaccurate information, which poses risks when such outputs are mistaken for truthful or reliable content.

Indigenous Knowledge and Relationships:

AI tools may pose risks to Indigenous data sovereignty and cultural protocols, including cultural appropriation and perpetuating stereotypes. They may not respect the First Nations Principles of OCAP® (Ownership, Control, Access, and Possession) or Indigenous intellectual property rights.

Environmental Impact:

Training and operating AI models consume significant resources, including electricity and water. For example, generating a single image may use as much energy as charging a smartphone, while an AI search can consume 4–5 times the energy of a traditional web search.

Privacy Invasion Through Re-Identification:

AI can re-identify individuals in anonymized data, potentially leading to privacy violations.

Equity in Access:

Access to AI tools depends on technology, reliable internet, and digital literacy skills. Barriers such as geographic location, costs, or accessibility challenges can limit equitable use, particularly for users with disabilities.

Misuse of Generated Content:

AI can be exploited to create fake news, deepfakes, or impersonations, leading to ethical concerns around disinformation and misuse.

Ownership and Control of Generated Content:

Determining ownership of AI-generated content remains a gray area, raising questions about intellectual property rights and usage.

Risk to Critical Thinking and Creativity:

Over-reliance on AI for tasks like reading, writing, or idea generation may undermine critical thinking and creativity.

Despite the considerations above, GenAI tools offer significant potential to enhance teaching and learning.

Personalized Learning:

AI can adapt learning experiences to individual needs, styles, and interests, offering a tailored educational experience.

Enhanced Productivity:

These tools streamline curriculum development, content creation, and research, freeing up educators to focus on student engagement and relationship-building.

Accessibility:

AI supports accessibility by enabling features like text-to-speech, speech-to-text, automatic captions, alt-text generation, translation, and audio descriptions. It can also enhance Universal Design for Learning (UDL) and support neurodiverse learners.

Democratization of Knowledge:

By reducing the need for advanced technical skills, AI provides more equitable access to information and creative tools.

Creativity:

AI enables rapid generation of creative and original content, encouraging exploration and innovation in learning activities.

Improved Decision-Making:

AI’s ability to analyze data and detect patterns can aid in diagnosis, predictions, and the design of effective learning strategies.